Interactive debate #buildTheNews

Times & Sunday Times

Stack: Ruby on Rails, Popcorn.js, Bootstrap, HTML, CSS, Heroku, Git, GitHub

At the Times and Sunday Times #buildTheNews hackathon

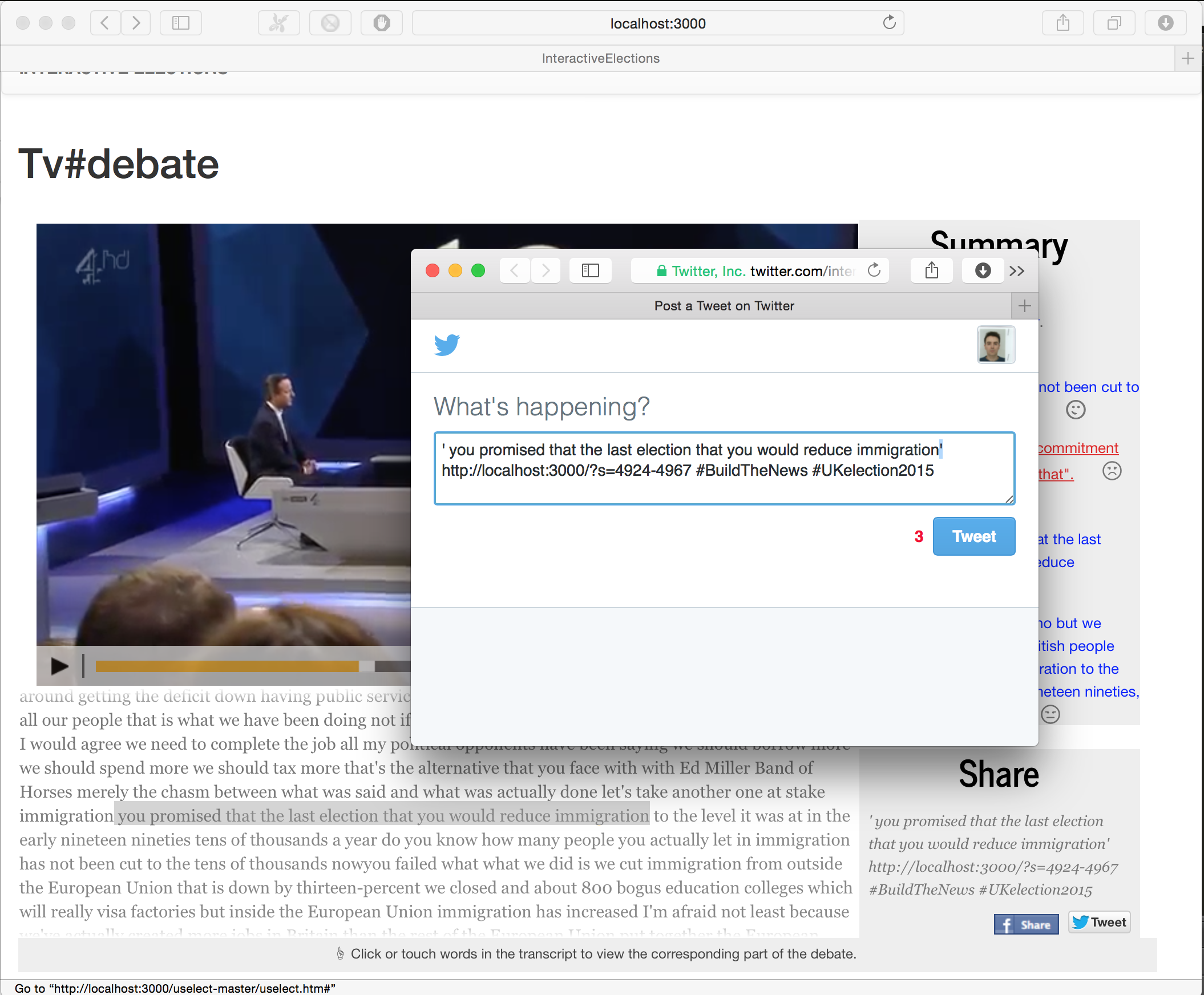

For the Times #buildTheNews hackathon, we decided to build a system that, given a video, would generate a transcription, identify the different speakers, and provide a summary of the main topics and keywords, as well as the emotional charge of each speaker.

We used the upcoming election debate as a use case, but the approach could be generalised to other interview-based video content.

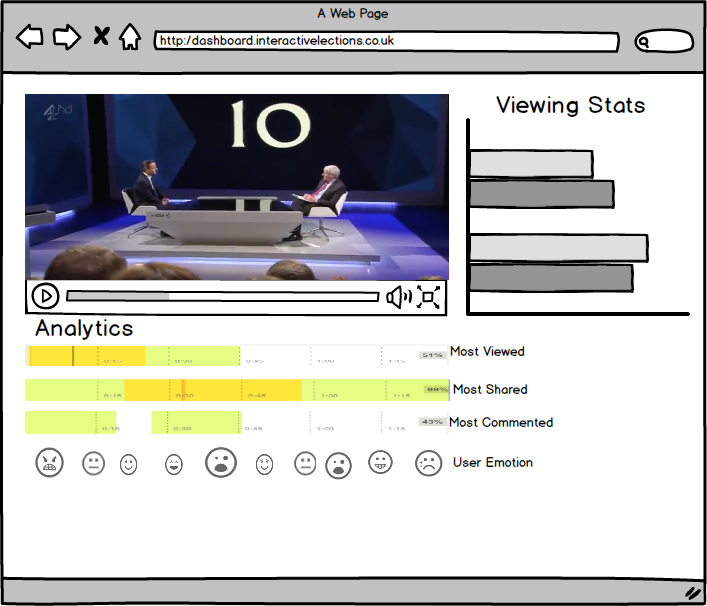

We also wanted to provide an analytics dashboard where the journalist could view a heat map of viewers' engagement with the piece, showing:

- most viewed

- most commented

- most shared

- emotions
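One way such a heat map could be computed is to bucket engagement events by their position in the video and count them per bucket. The sketch below assumes a hypothetical `EngagementEvent` record (an event kind plus a timestamp in seconds) and a 30-second bin width; neither comes from the original project, which used a hardcoded mockup.

```python
import math
from dataclasses import dataclass


@dataclass
class EngagementEvent:
    # Hypothetical record of one viewer interaction with the video.
    kind: str         # e.g. "view", "comment", "share" or an emotion label
    timestamp: float  # position in the video, in seconds


def engagement_heatmap(events, duration, bin_seconds=30):
    """Count events of each kind in fixed-width time bins across the video."""
    n_bins = max(1, math.ceil(duration / bin_seconds))
    heatmap = {}
    for event in events:
        if not 0 <= event.timestamp < duration:
            continue  # ignore events that fall outside the video
        row = heatmap.setdefault(event.kind, [0] * n_bins)
        row[int(event.timestamp // bin_seconds)] += 1
    return heatmap


def normalise(row):
    """Scale counts to 0..1 so each row can be drawn as colour intensity."""
    peak = max(row, default=0) or 1
    return [count / peak for count in row]
```

For example, two views at 5 s and 40 s, a comment at 41 s and a share at 95 s in a 120-second clip give `{'view': [1, 1, 0, 0], 'comment': [0, 1, 0, 0], 'share': [0, 0, 0, 1]}`. Normalising each row separately lets sparse signals such as shares remain visible next to much larger view counts.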

We felt this was just as important. For instance, a journalist writing about the debate could look at this data for clues about what readers already think of it, making it easier to engage them in a conversation in the next article. Analytics of this kind could be useful in other contexts too.

The Concept

For the analysis of the video interview, the ambition was to use existing natural language processing and sentiment analysis technology to give an insight into the subtext of an interview.

On the morning of the hackathon I was reading through the New York Times innovation report for inspiration when I came across the point that interactive news projects are very often built as one-offs, and that a more sustainable approach would be to create structures where form and content are separated, making them reusable.

Technology used

We drew on a number of open source technologies, APIs and libraries.

We used Ruby on Rails and Bootstrap as the underlying frameworks, with HTML, CSS and JavaScript on the front end.

Given the tight turnaround, however, we didn't have time to integrate the various APIs into our system, so we treated it as an R&D (research and development) project, where you identify all the components and make sure they work together before actually building the product. We ran the calls to each system one at a time, passing the output from one component to the next by hand, and then hardcoded an interactive high-fidelity mockup. All that was left then was to bring it all together into a full-fledged application.
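As an illustration of one such hand-off, the sketch below merges timed captions with speaker-diarization segments, labelling each caption with the speaker whose segments overlap it the most, and saves the result as JSON that a mockup could load. The `Caption` type, the `(start, end, speaker)` tuple format and the file layout are all assumptions for this example; they are not LIUM's native output format, which would need converting first.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Caption:
    start: float            # seconds
    end: float              # seconds
    text: str
    speaker: str = "unknown"


def overlap(a_start, a_end, b_start, b_end):
    """Length in seconds of the intersection of two time intervals."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_speakers(captions, speaker_segments):
    """Give each caption the speaker with the largest total time overlap.

    speaker_segments: hypothetical list of (start, end, speaker) tuples.
    Captions that overlap no segment keep the speaker "unknown".
    """
    for caption in captions:
        totals = {}
        for seg_start, seg_end, speaker in speaker_segments:
            shared = overlap(caption.start, caption.end, seg_start, seg_end)
            if shared > 0:
                totals[speaker] = totals.get(speaker, 0.0) + shared
        if totals:
            caption.speaker = max(totals, key=totals.get)
    return captions


def save_stage(captions, path):
    """Persist an intermediate result so the next stage or the mockup can read it."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump([asdict(c) for c in captions], fh, indent=2)
```

Writing each stage's output to a file mirrors the one-call-at-a-time workflow: every component can be rerun or swapped independently, and the final JSON can be hardcoded into the front end until the live integration exists.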

These are some of the technologies we used:

- Speech to text (YouTube captioning)

- Speaker diarization (LIUM, the same library used by the BBC)

- Sentiment analysis, topics & keywords (MonkeyLearn)

- Summarization (Text Summarization API)

- SRT → HTML (library)

- Social media sharing (library)

- Interactive transcripts, using the Popcorn.js library, from Al Jazeera's open source US Election Debate Hyperaudio project
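The SRT → HTML step can be sketched as parsing each subtitle cue and wrapping its text in an element that carries the cue's timing, which is what an interactive transcript needs in order to sync text with video. This is not the library the team used; the `data-start`/`data-end` attribute names and the `transcript` class are hypothetical choices for illustration.

```python
import html
import re

TIMESTAMP = re.compile(r"(\d+):(\d{2}):(\d{2}),(\d{3})")


def to_seconds(stamp):
    """Convert an SRT timestamp such as 00:01:02,500 to seconds."""
    h, m, s, ms = map(int, TIMESTAMP.search(stamp).groups())
    return h * 3600 + m * 60 + s + ms / 1000


def parse_srt(srt_text):
    """Return a list of (start, end, text) tuples from SRT content."""
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.replace("\r\n", "\n").strip()):
        lines = [line for line in block.splitlines() if line.strip()]
        # The timing line contains "-->"; the optional index line precedes it.
        timing = next((i for i, line in enumerate(lines) if "-->" in line), None)
        if timing is None:
            continue  # skip malformed blocks
        start, end = lines[timing].split("-->")
        cues.append((to_seconds(start), to_seconds(end), " ".join(lines[timing + 1:])))
    return cues


def srt_to_html(srt_text):
    """Wrap each cue in a <span> whose attributes record its time range."""
    spans = [
        f'<span data-start="{start:.3f}" data-end="{end:.3f}">{html.escape(text)}</span>'
        for start, end, text in parse_srt(srt_text)
    ]
    return '<p class="transcript">\n' + "\n".join(spans) + "\n</p>"
```

Because the timings live in the markup, a small front-end script (not shown) could read them to seek the video when a sentence is clicked and to highlight the span that is currently playing. Escaping the cue text keeps characters such as `&` from breaking the HTML.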

Photo credit: @MattieTK